As heartbreaking as it may seem, #Nintendo’s pricing for the #Switch2 and its games is not actually an increase over previous years once inflation is taken into account. For more details on the why and how, check out Nintendo Forecasts' video on the matter. #videogames #inflation #economics www.youtube.com/watch
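The heart of that argument is comparing prices in constant dollars: scale an older price by the ratio of a price index (such as the CPI) between the two dates, then compare it to the new price. Here's a minimal Python sketch of that arithmetic. The function names and index values are hypothetical placeholders for illustration, not real CPI figures or actual console prices, and this isn't necessarily the method used in the linked video.

```python
def inflation_adjusted(price: float, cpi_then: float, cpi_now: float) -> float:
    """Express a past price in today's dollars using the ratio of two index values."""
    if cpi_then <= 0:
        raise ValueError("cpi_then must be positive")
    return price * (cpi_now / cpi_then)


def real_change_pct(old_price: float, new_price: float,
                    cpi_then: float, cpi_now: float) -> float:
    """Percent change of new_price versus old_price after adjusting old_price
    for inflation. A negative result means the new price is cheaper in real terms."""
    adjusted_old = inflation_adjusted(old_price, cpi_then, cpi_now)
    return (new_price - adjusted_old) / adjusted_old * 100.0


if __name__ == "__main__":
    # Hypothetical placeholder index values, NOT real CPI data.
    print(f"{real_change_pct(100.0, 120.0, 100.0, 130.0):.2f}%")  # prints -7.69%
```

With those placeholder values, a $100 item from when the index stood at 100 is worth $130 in today's terms. So a new $120 price is actually about 7.69% cheaper in real terms, even though the sticker price went up.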

Reading: BIG-ASS SWORD, Andreas Butzbach

video games

capitalism

Microposts

movies

animation

Microposts

I’m not sure the downside mentioned is an actual downside. By all indications, the #LiveAction How To Train Your Dragon is fantastic, bucking the trend of such remakes. I just hope the flying scenes evoke the same emotions they did in the original. #movies #animation #httyd www.slashfilm.com/1825943/h…

capitalism

technology

Microposts

Um… yeah. Realign your perspective on #AI. #Capitalism doesn’t intend to leverage AI for the betterment of humanity. Instead, it plans to use AI, in conjunction with a range of other technologies, to eliminate humans from the money-making process. #tech arstechnica.com/tech-poli…

politics

video games

Microposts

#TFG’s #tariffs have prompted #Nintendo to pause #Switch2 pre-orders in the #US, which were set to start on April 9th, so the company can evaluate the rapidly changing economic landscape. Fans are already unhappy about Switch 2 pricing. #videogames #news kotaku.com/switch-2-…

music

Microposts

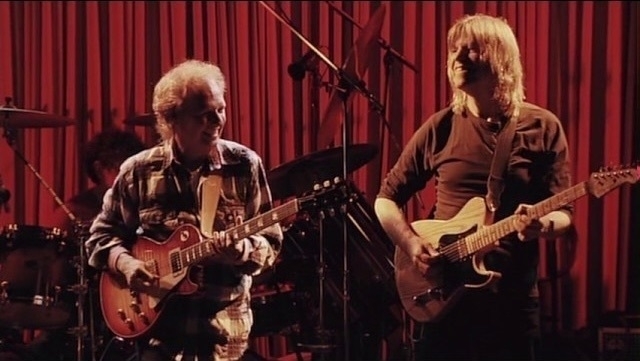

#NowPlaying @ Nothing Manor: Lee Ritenour & Mike Stern w/ The Freeway Band Live at Blue Note Tokyo (2011). John Beasley on keys, Melvin Lee Davis on bass, and the incomparable Simon Phillips on the drums with an epic solo at around 54 minutes. #music #jazz #jazzfusion www.youtube.com/watch

capitalism

Microposts

Ford brought a version of the #Ford #Sierra over here and called it the #Merkur XR4Ti. Despite being the better car, it barely sold thanks to shitty dealerships upselling buyers to shitty #Lincolns for higher commissions. This is why we can’t have nice things. #cars #capitalism www.youtube.com/watch

video games

Microposts

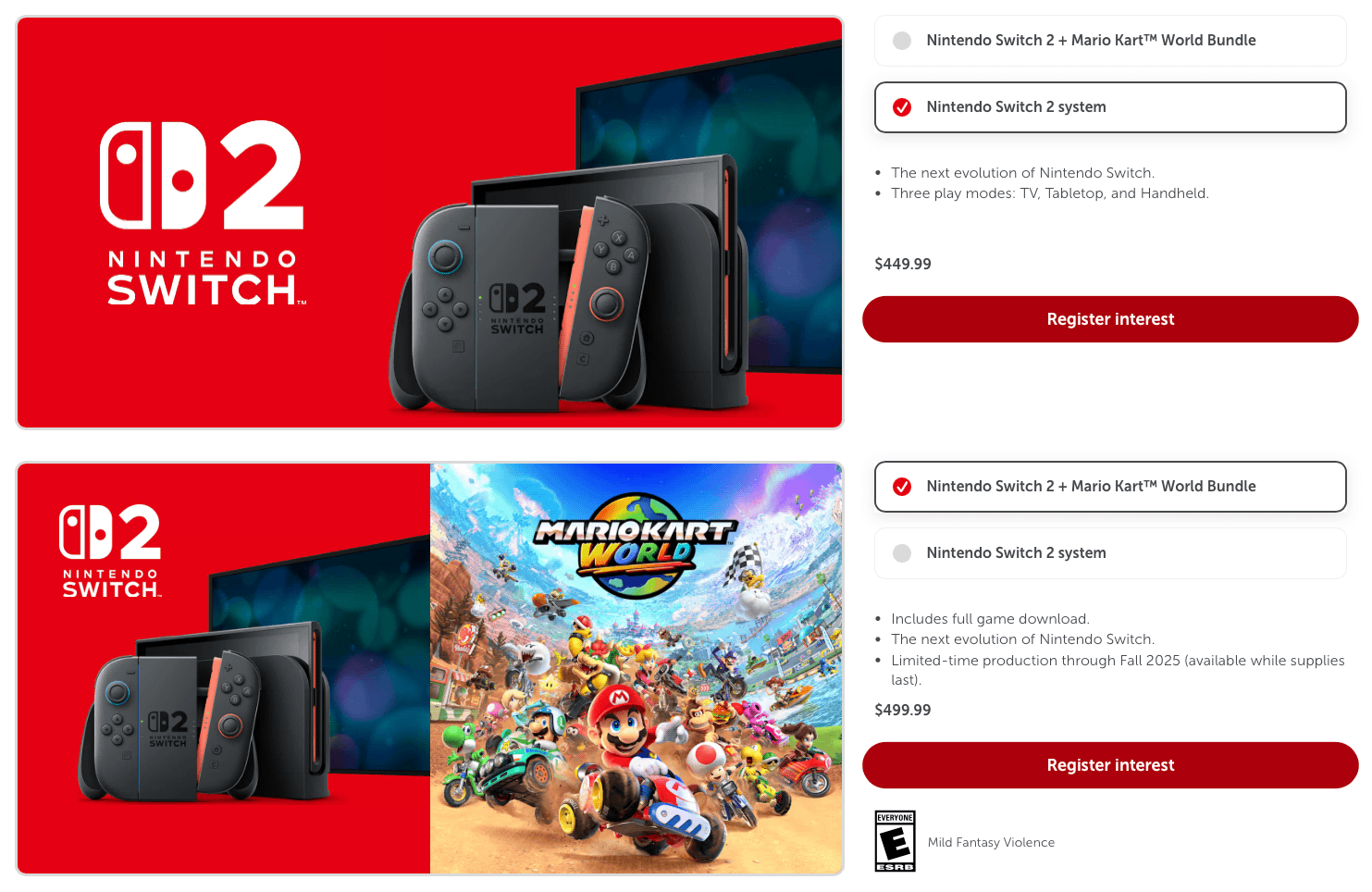

#Nintendo #Switch2 pricing for the US:

Switch 2: $449
Switch 2 + Mario Kart World: $499

The systems will be available starting June 5th. Accessory prices (e.g., Pro Controller, Switch 2 Camera, etc.) are not yet available. #videogames #MarioKart #NorthAmerica

movies

Microposts

Wow. This really sucks. Val Kilmer has died of pneumonia at the age of 65. There aren’t many more details yet, but it’s terrible. #hollywood #acting #movies #RIP www.reuters.com/world/us/…

apple

technology

howto

Microposts

Updates are available across the board for #Apple devices, including the #Mac, #iPhone, #iPad, #AppleWatch, #AppleTV, and more. watchOS 11.4 was pulled yesterday, but has now been released. Mr. Macintosh has details about the updates & OCLP support. #tech #howto www.youtube.com/watch

video games

Microposts

If you’re a #tech #dweeb like me, you’ll love this new video from the totally legitimate #TechDweeb with an important announcement! #AprilFools #videogames #RetroGameCorps. www.youtube.com/watch

capitalism

howto

Microposts

This appears to be a thing now. #amazon #deleteamazon #capitalism #corporatebacklash #howto www.engadget.com/big-tech/…

movies

animation

Microposts

Good news, everyone! Okay, just for #animation lovers, but it’s still great news! Warner Bros. Discovery CEO David Zaslav shelved Coyote vs. Acme for tax reasons, but it’s now being released by Ketchup Entertainment, the distributor of The Day the Earth Blew Up. It’s official, but we don’t have a date yet. #movies www.slashfilm.com/1823161/c…

technology

howto

retro technology

Microposts

I’m definitely a fan of #RetroComputing, but my allegiance clearly lies with #Apple rather than #Microsoft. My old PCs run #Linux or #Haiku, but I get what retro #Windows peeps want. So, if you want to install #Windows98 quickly, here you go. #tech #howto #utilities boingboing.net/2025/03/3…

television

scifi

Microposts

If you’ve been crouching in a closet moaning to yourself about how long it’s taking to get Season 3 of #StarTrekStrangeNewWorlds, we may finally have an official date, courtesy of SkyShowtime Netherlands: August 1st. That’s it for now. #StarTrek #STSNW #scifi www.youtube.com/watch

music

Microposts

#NowPlaying @ Nothing Manor: Toy Matinee (1990). Patrick Leonard & Guy Pratt formed a band & invited #KevinGilbert to join them on vocals, with Pat & Kevin writing most of the songs & Guy co-writing about half. One of the best #pop albums ever. #music www.youtube.com/watch

music

Microposts

#NowPlaying @ Nothing Manor: Envy of None - Stygian Wavz (2025). Featuring Alex Lifeson, but sounding nothing like his legendary band #Rush, this sophomore release from EON is melancholic and exhilarating. #music #synthpop #progpop #poprock youtube.com/playlist

music

Microposts

#NowPlaying @ Nothing Manor: Pure Reason Revolution - Coming Up To Consciousness Deluxe (2025). Just wow! This British #ProgRock, #AltProg, #ArtRock, #NeoProg band formed back in 2003 and I’m just hearing about them. #music www.youtube.com/watch

music

Microposts

#NowPlaying @ Nothing Manor: Death Cab for Cutie - Kintsugi (2015). With OG guitarist Chris Walla on his way out, Ben Gibbard set about remaking DCfC in the spirit of kintsugi, the Japanese art of repairing broken pottery with gold. #music #altrock www.youtube.com/watch

music

Microposts

#NowPlaying @ Nothing Manor: Death Cab for Cutie - Codes and Keys Deluxe (2011). I found DCfC in 2005 when their Plans album started hitting AAA radio stations and have loved them since. Great album. #music #altrock #collegeradio www.youtube.com/watch

movies

satire

comics & manga

Microposts

1999’s Mystery Men was a box office bomb that deserved much better, but it does enjoy strong cult status. I have it on Blu-Ray and adore it. I’d always wondered what happened to the Herkimer Battle Jitney. Check the first comment. #movies #MysteryMen #superheroes www.youtube.com/watch

Microposts

Has anyone else noticed that, in the Tomodachi Life: Living the Dream trailer from #Nintendo’s March 27th #Direct for the #Switch, the guy sleeping on the deck chair on the beach is farting? The little cloud is even tinted green FFS!! #videogames www.youtube.com/watch

Microposts

After some rather significant technical gymnastics, some very smart people (myself not included) seem to have found a fix to get #Microblog to #Mastodon crossposting working again with my setup! Here now is a test post to see how it works :) Documentation sometime next week for the benefit of other admins, as long as everything remains stable 😁🤞 #socialmedia #serveradmin #tech

politics

rights

security

Microposts

PUBLIC SERVICE ANNOUNCEMENT: If you are #traveling, you need to be aware of your #rights, and of those that could be taken from you when on the road. Axios has a helpful guide to securing your data. #cellphones #security #internet #politics #uspol www.axios.com/2025/03/2…

animation

Microposts

#Panasonic has commissioned the creation of an Original Video #Animation, commonly known as an #OVA. It’s not for marketing, per se, but a fairy tale encouraging us to believe in ourselves and perceive the world more positively. #OsakaKansaiExpo #2025 #LandOfNOMO www.youtube.com/watch

video games

Microposts

One of the biggest surprises from yesterday’s #NintendoDirect was the upcoming new feature called #VirtualGameCard. For an explanation, read the brief article. I will note that this is way late but also right on time for the #Switch2 Direct next week. #videogames arstechnica.com/gaming/20…